ByteDance’s (字节跳动) latest AI push has landed the company in the firing line. The trouble centres on Seedance 2.0 (即梦AI), an AI video generator that can turn short text prompts into highly realistic clips. Within days of launch it was also generating something else: complaints from Hollywood.

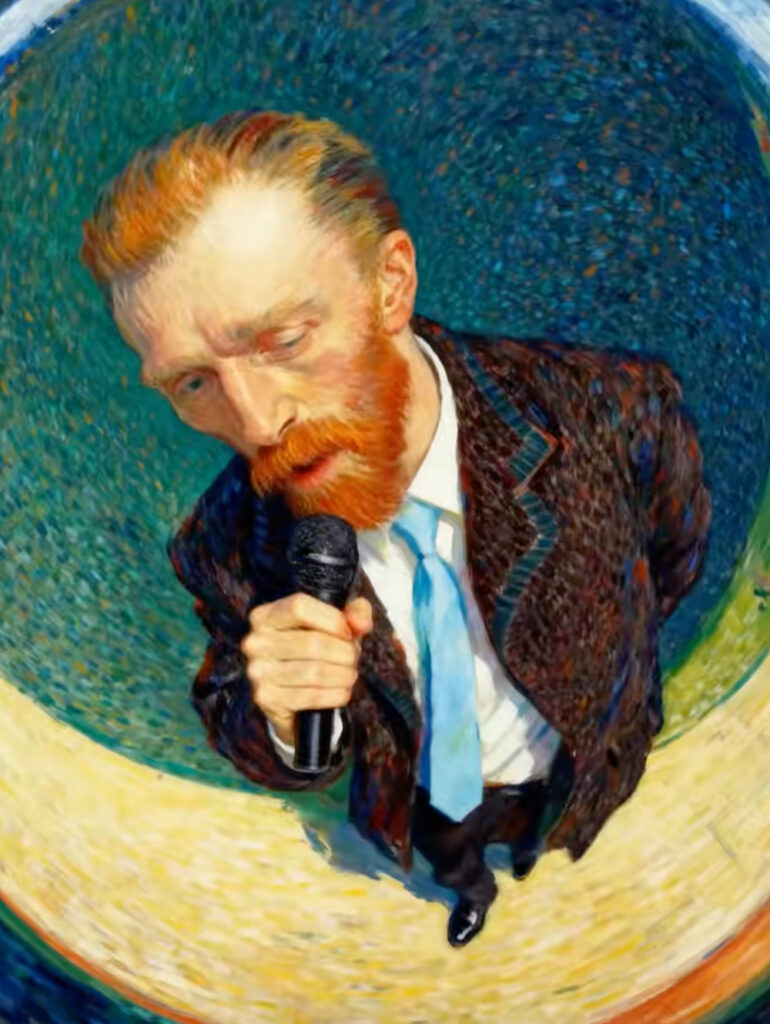

At the centre of this issue is realism. Seedance 2.0 can produce content that resembles known actors, film scenes and recognisable IPs with minimal input. That capability has triggered accusations from major studios – including The Walt Disney Company, or Disney to you and me – that the tool enables copyright infringement and unauthorised use of likeness.

Industry bodies have moved quickly. The Motion Picture Association has reportedly raised concerns about how such models are trained, while SAG-AFTRA has reiterated its stance that performers’ likenesses and voices cannot be replicated without consent or compensation. The tension is familiar, but the flavour is new. This is not crude deepfake territory – it’s near-production-quality output at scale.

ByteDance, for its part, is signalling caution. The company says it ‘respects intellectual property rights’ and has begun tightening safeguards and restricting certain uploads. It is also working on stronger content controls. But it’s the same story as with all generative AI systems: the challenge sits upstream. What really matters is how the model was trained, and whether copyrighted material or identifiable likenesses were included in that process.

Timing matters. Generative video is fast becoming the next hot zone in AI, following text and image models. If tools like Seedance 2.0 can compress production timelines from weeks to minutes, they also compress the legal grey areas that come with them.

For now, that familiar pattern holds. A powerful new model launches. It goes viral. Then comes the reckoning. The difference this time is how close the output is to the real thing.